Alright, buckle up, folks, because today I’m spilling the beans on my little “big boob ph” adventure. Now, before your mind goes completely into the gutter, let me clarify: we’re talking about playing around with image processing in Python, specifically targeting… well, let’s just say, enhancements. You get my drift? No actual boobs involved, just some pixels getting a makeover.

It all started innocently enough. I was bored, scrolling through Reddit as you do, when I stumbled upon a thread where people were using AI to “improve” photos. Curiosity piqued, I thought, “Hey, I’m a somewhat competent coder, I can probably whip something up that’s equally ridiculous.”

- Step 1: Image Acquisition (aka Finding a Suitable Victim)

First things first, I needed an image. Obviously, I wasn’t going to use any real photos of anyone. That’s just creepy. So, I hopped onto a stock photo site and found a picture of a mannequin. Perfect! No consent issues, no real-life consequences, just pure, unadulterated digital manipulation.

- Step 2: Setting up the Environment (aka Wrangling the Anaconda)

Next up, I needed my arsenal. I’m a big fan of Anaconda, so I fired that up and created a new environment. Then I installed the usual suspects: opencv-python for image processing, numpy for number crunching (because, math!), and scikit-image, because why not?
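If you want to confirm the environment actually has everything before writing any image code, a tiny sanity check helps. This is a minimal sketch, not something from the original project: the `REQUIRED` mapping and the `check_environment` helper are hypothetical names. The one gotcha it encodes is that install names don't match import names: `opencv-python` is imported as `cv2`, and `scikit-image` as `skimage`.

```python
import importlib

# Install name (what you ask pip/conda for) -> import name (what Python sees).
# Hypothetical list matching the three packages mentioned above.
REQUIRED = {
    "opencv-python": "cv2",
    "numpy": "numpy",
    "scikit-image": "skimage",
}


def check_environment(required=REQUIRED):
    """Return {install_name: version string, or None if the import fails}."""
    report = {}
    for install_name, module_name in required.items():
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            report[install_name] = None  # not installed in this environment
            continue
        # Most scientific packages expose __version__; fall back if one doesn't.
        report[install_name] = getattr(module, "__version__", "unknown")
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name:<15} {version or 'MISSING'}")
```

Running it inside the freshly created environment prints one line per package, with `MISSING` flagging anything the install step skipped.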

- Step 3: The “Enhancement” Algorithm (aka Trial and Error with Pixels)

This is where things got interesting. My initial plan was to use some kind of AI-powered magic. But I quickly realized that I didn’t have the time or the processing power to train a neural network on boob-enhancing data. So, I went the old-school route: good ol’ fashioned image filtering and reshaping.

I started by trying to identify the area of interest. This involved a lot of manual tweaking with OpenCV’s contour detection. I’d load the image, convert it to grayscale, apply a blur, and then try to find the contours that resembled… you know… the area. It was a lot of fiddling with parameters like threshold values and minimum contour area.

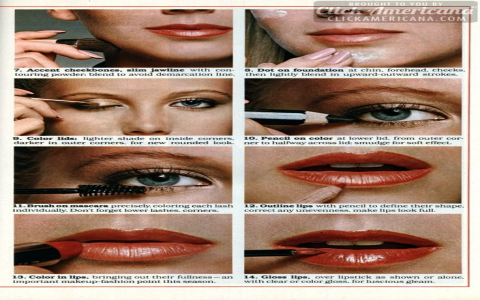

Once I had the contour, I could isolate that region and apply various transformations. I experimented with:

- Resizing the region slightly.

- Applying a subtle Gaussian blur.

- Adjusting the contrast and brightness.

- Adding a tiny bit of perspective distortion (to give it that “lifted” look).

Each of these steps required a lot of trial and error. I’d tweak a parameter, run the code, and then stare at the result, cringing or chuckling depending on how it turned out. Let’s just say there were a lot of iterations where the mannequin ended up looking like it had been stung by a bee.

- Step 4: The Grand Finale (aka Merging and Masking)

Finally, after hours of tinkering, I had something that was… passable. It wasn’t perfect, by any means. But it was definitely an “enhancement.” The final step was to merge the modified region back into the original image. This involved creating a mask to blend the edges seamlessly, so it wouldn’t look like I’d just pasted a blob onto the mannequin’s chest.

The code itself? A chaotic mess of OpenCV functions, NumPy arrays, and magic numbers. I wouldn’t inflict it on anyone. It’s a testament to the power of copy-pasting from Stack Overflow and sheer stubbornness.

The Result?

Well, let’s just say it was… interesting. It wasn’t exactly a masterpiece. More like a cautionary tale about the dangers of boredom and the lure of image manipulation. Did it work? Sort of. Was it ethical? Debatable. Was it a fun learning experience? Absolutely.

So, there you have it. My “big boob ph” adventure. A reminder that even the silliest projects can teach you something new about coding and the weird world of image processing. Now, if you’ll excuse me, I’m going to go delete all the evidence.